Balancing Privacy and Security: Google Apple Contact Tracing

00. Introduction

Recently, Google and Apple announced they would begin working together to help slow the spread of COVID-19 by using their respective mobile platforms to help notify users if they have been exposed. They outlined their plans to develop technical solutions, such as an interoperable API for exposure notification using Bluetooth Low Energy, and eventually embedding the notification system into Android and iOS, under the condition that it will eventually be removed once the crisis is over. As of early June, Apple already has support for exposure notification available in their builds of iOS, so the development and deployment of this technology may be even swifter than projected.

The initial launch of the API, intended for use by governments, universities, and public health care providers, has already begun seeing use. This API will give these entities the capability to use iOS and Android devices for tracking exposure to COVID-19 using an operational framework that is strictly controlled by Google and Apple. The companies advise that no special exceptions will be made to how the system operates at the behest of these groups, and groups will be limited in the number of apps they can register on their own behalf. Later this year, Google and Apple plan to add native support to their respective mobile operating systems, so COVID-19 tracking will be a built-in feature, one that they say will be only temporarily available.

Google and Apple have done a fantastic job so far of keeping their development transparent and community-driven. They released both the Bluetooth and cryptographic specifications for review and, after receiving community input and feedback, released an updated cryptographic model that addressed many of the concerns raised about the original drafts.

In times of crisis, it is not uncommon for people’s privacy to be impinged upon under the banner of security. Some groups, including national governments, have received backlash from security professionals for making their specific tracing apps closed-source, as it prevents people from knowing if the apps are secure and how user privacy is addressed. Other groups, mostly in academia, have been working together to create distributed models for handling COVID-19 tracing and exposure notification, but these solutions tend to be developed slowly and by committee, which ends up sacrificing response time to the crisis at hand.

It is truly difficult to balance these three factors: privacy, security, and time to respond. What’s interesting about Google and Apple’s approach of splitting their work into two distinct parts, the API and the native support, is that it allows other organizations to decide, if only partially, how they want to balance these factors. While Google and Apple will soon handle exposure notification natively on their platforms, creating a robust API for semi-public usage helps other organizations speed up their deployment of a contact tracing application and implement the exposure notification methods they see fit. Privacy-protecting measures in the API will make using this information for nefarious purposes difficult, but an organization would still be able to learn potentially sensitive information, such as when a user is infected.

In this post, we’ll talk a bit about how Google and Apple’s system works under the hood, and the security considerations that make the solution a good one for preserving user privacy while preventing bad actors from imitating users or faking exposure data.

01. Google and Apple’s Approach

For the sake of brevity, we’ll refer to Google and Apple’s Exposure Notification System as GAENS; and for the sake of ease, we’ll talk about GAENS from the perspective of two users, Bob and Alice, and their respective mobile phones. It’s important to note that this platform is entirely opt-in to help preserve privacy. Both Bob and Alice must opt in for their data to be shared and for this system to work.

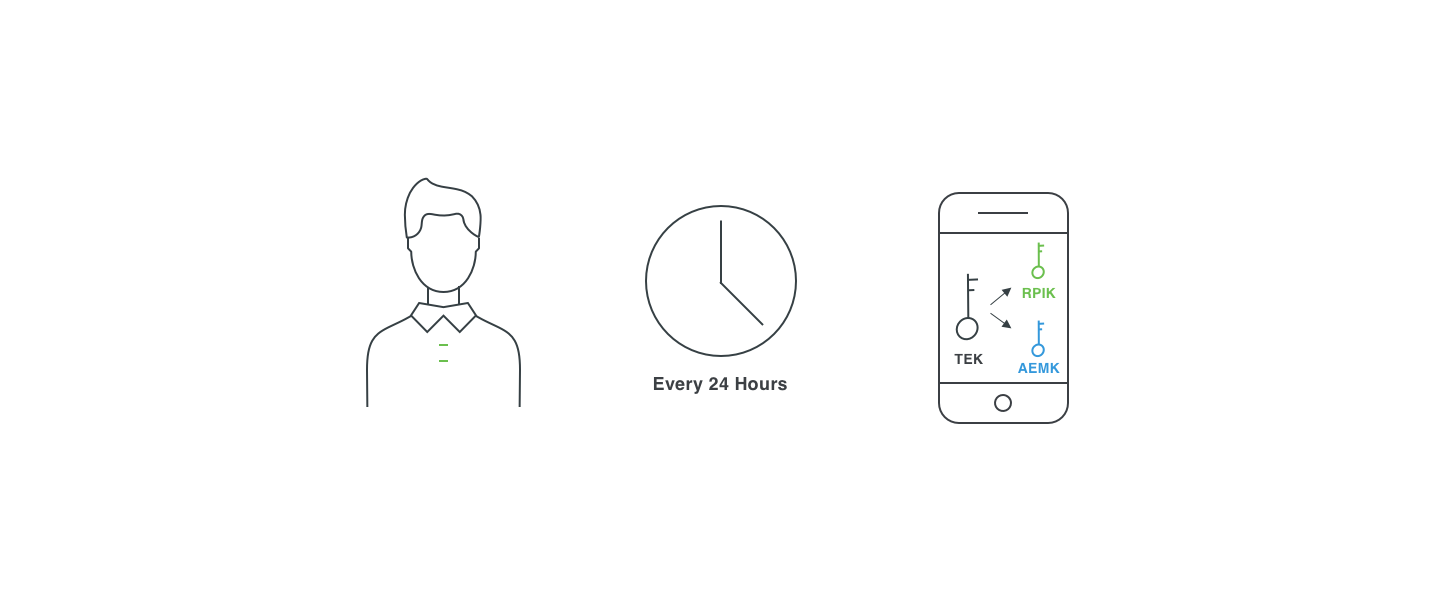

If both Bob and Alice opt in to using GAENS, their devices are responsible for creating and managing three types of keys throughout a 24-hour period. These keys are used to encrypt and broadcast information over Bluetooth, so when Alice comes into proximity with Bob, her mobile device will store Bob’s broadcasted info, and vice versa.

The life cycles of the keys used by Bob and Alice’s devices fall into two intervals: daily events, which occur once in a 24-hour block, and events that occur roughly every 15 minutes. Additionally, there is an interval at which Bob and Alice’s devices will check in with a Diagnosis Server to see if they have potentially been exposed. We’ll break down the interval periods along with what happens in the event of a positive COVID-19 diagnosis below.

02. Once A Day

Each day, while GAENS is active, Bob’s mobile device generates a new Temporary Exposure Key, a Rolling Proximity Identifier (RPI) Key, and an Associated Encrypted Metadata Key. The Temporary Exposure Key is used to create the other two keys, which are the keys used most during this period. All three keys are valid for Bob only for the next 24 hours, and are used to derive the rest of the identifying data that will be broadcasted by Bob’s device to Alice’s device over Bluetooth during those 24 hours.

Each key is 16 bytes long, and the Temporary Exposure Key is a cryptographically random number, which makes it extremely unlikely that Bob and Alice will generate identical keys while also taking up little space on a modern mobile device. As long as Bob remains healthy, the daily Temporary Exposure Keys will remain stored securely on his device. However, if Bob is diagnosed as positive for COVID-19, these keys may be released in order to notify others that they’ve potentially been exposed.
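To make the daily key setup concrete, here is a minimal Python sketch. It assumes the derivation described in Google and Apple’s published cryptography specification, in which the two daily keys are derived from the Temporary Exposure Key using HKDF with SHA-256. The function names are our own, and the label strings (`EN-RPIK`, `EN-AEMK`) come from that specification rather than from this post, so treat this as an illustration and not a reference implementation.

```python
import hashlib
import hmac
import secrets

KEY_LEN = 16  # bytes, matching the key length described above


def hkdf_sha256(key_material: bytes, info: bytes, length: int = KEY_LEN) -> bytes:
    """HKDF (RFC 5869) with SHA-256 and an all-zero salt."""
    salt = bytes(hashlib.sha256().digest_size)
    # Extract step: condense the input key into a pseudorandom key.
    prk = hmac.new(salt, key_material, hashlib.sha256).digest()
    # Expand step: stretch the pseudorandom key to the requested length.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def new_daily_keys() -> dict:
    """Generate a random Temporary Exposure Key and derive the two daily keys."""
    tek = secrets.token_bytes(KEY_LEN)  # cryptographically random
    return {
        "tek": tek,
        "rpik": hkdf_sha256(tek, b"EN-RPIK"),  # Rolling Proximity Identifier Key
        "aemk": hkdf_sha256(tek, b"EN-AEMK"),  # Associated Encrypted Metadata Key
    }
```

Because both derived keys are a deterministic function of the Temporary Exposure Key, only that one key ever needs to be stored or shared; anyone holding it can recompute the other two.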

03. Once Every 15 Minutes

Roughly every 15 minutes, when the Bluetooth MAC address is rotated on iOS and Android devices, Bob’s phone will produce an RPI based on the daily RPI Key and also produce Associated Encrypted Metadata.

The associated metadata is encrypted using both the Associated Encrypted Metadata Key and the RPI itself. It contains (and is currently limited to) the transmission power of the Bluetooth signal, for better distance approximation, and the current version number of the protocol. This data and the identifiers are then associated with an interval of the day. In the GAENS system, the day is divided into 144 10-minute intervals, and the interval in which an identifier is created serves as its timestamp. These interval numbers will only come into play if the owner of the device is diagnosed with COVID-19, at which point other devices can use them to re-create the identifiers when provided with a Temporary Exposure Key.
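The interval arithmetic is simple enough to sketch in a few lines of Python. We assume here that intervals are counted from the Unix epoch in 10-minute steps; the function names are hypothetical and chosen only for illustration.

```python
INTERVAL_SECONDS = 10 * 60  # one interval is 10 minutes
INTERVALS_PER_DAY = 144     # 24 hours / 10 minutes


def interval_number(unix_time: int) -> int:
    """Number of 10-minute intervals elapsed since the Unix epoch."""
    return unix_time // INTERVAL_SECONDS


def day_start_interval(unix_time: int) -> int:
    """First interval of the 24-hour block that contains unix_time."""
    n = interval_number(unix_time)
    return (n // INTERVALS_PER_DAY) * INTERVALS_PER_DAY
```

A device that later receives a Temporary Exposure Key along with the first interval of its day can step through all 144 interval numbers from that starting point.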

Throughout the day, Bob’s phone will broadcast the RPI and its associated metadata. If Alice is ever in proximity of Bob, her device will pick up and store Bob’s anonymized info when it is broadcasted.

04. In the Event of a Positive Diagnosis

In the GAENS model, the only time that information that may identify a person ever leaves their device is in the event they receive a positive diagnosis of COVID-19 from a healthcare professional.

In our example, when Bob receives a positive diagnosis from his healthcare professional, he has the option to notify Alice and others who may have been in contact with him during the exposure period (typically 14 days). If Bob chooses to do so, his phone will upload the Temporary Exposure Keys for that exposure window to a central Diagnosis Server. In some models of how this could work, Bob and his phone would additionally have to request authorization from his healthcare professional to upload these keys, which helps keep the data accurate. It is important to note that Bob’s device will only release the Temporary Exposure Keys from the last 14 days, and not all of Bob’s previous keys, thus limiting the amount of information, albeit anonymized, that Bob exposes.
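As a rough sketch of that 14-day limit, the selection of keys to upload might look like the following Python. The storage layout (a dictionary keyed by calendar day) and the function name are assumptions made for this example; the actual frameworks manage key storage internally.

```python
from datetime import date, timedelta

EXPOSURE_WINDOW_DAYS = 14  # exposure period described above


def keys_to_upload(stored_keys: dict, today: date) -> dict:
    """Return only the Temporary Exposure Keys from the last 14 days.

    stored_keys maps a calendar day to that day's key (hypothetical layout).
    """
    cutoff = today - timedelta(days=EXPOSURE_WINDOW_DAYS)
    return {day: tek for day, tek in stored_keys.items() if cutoff < day <= today}
```

Older keys simply never leave the device, which is what bounds how much of Bob’s history a diagnosis can reveal.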

When Alice’s phone checks in with the Diagnosis Server to see if there are new exposure keys, it will download these keys, as well as keys from other individuals who were also diagnosed.

Let’s assume Bob successfully uploads his keys and has gone home to get some rest, and Alice’s phone has downloaded all the most recent exposure keys from Apple or Google’s Diagnosis Server. Alice’s phone will then use these Temporary Exposure Keys to generate all 144 possible RPIs that could have been broadcasted throughout a given day. Each of these RPIs is checked against the list of RPIs that Alice’s phone has scanned in the past. In this case, since Bob’s Temporary Exposure Key can recreate an RPI that Alice’s phone has stored, there is a match.
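The matching step can be sketched in Python as follows. One important caveat: to keep the example dependent only on the standard library, we substitute a truncated HMAC-SHA256 for the AES-based identifier derivation that the real system uses, so the identifiers produced here will not match real GAENS output. All function names and the data layout are hypothetical.

```python
import hashlib
import hmac
import secrets

INTERVALS_PER_DAY = 144


def stand_in_rpi(tek: bytes, interval: int) -> bytes:
    """Stand-in identifier derivation: truncated HMAC-SHA256, NOT the real AES step."""
    message = b"EN-RPI" + interval.to_bytes(4, "little")
    return hmac.new(tek, message, hashlib.sha256).digest()[:16]


def rpis_for_day(tek: bytes, first_interval: int) -> dict:
    """Map each of the day's 144 possible identifiers to its interval number."""
    return {
        stand_in_rpi(tek, first_interval + i): first_interval + i
        for i in range(INTERVALS_PER_DAY)
    }


def find_matches(diagnosis_keys, observed: set) -> list:
    """Return the intervals at which an observed identifier matches a diagnosis key.

    diagnosis_keys is an iterable of (tek, first_interval) pairs.
    """
    matches = []
    for tek, first_interval in diagnosis_keys:
        for rpi, interval in rpis_for_day(tek, first_interval).items():
            if rpi in observed:
                matches.append(interval)
    return sorted(matches)


# Alice scanned two of Bob's identifiers and one from a stranger.
bob_tek = secrets.token_bytes(16)
stranger_tek = secrets.token_bytes(16)
observed = {
    stand_in_rpi(bob_tek, 2885),
    stand_in_rpi(bob_tek, 2886),
    stand_in_rpi(stranger_tek, 2885),
}
print(find_matches([(bob_tek, 2880)], observed))  # [2885, 2886]
```

Note that all of the matching happens on Alice’s phone. The server only ever distributes keys, and the stranger’s identifier stays unmatched because the stranger’s key was never uploaded.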

Because this information is anonymized, Alice doesn’t know who was diagnosed, only that she was in the vicinity of someone who was recently diagnosed with COVID-19 and roughly how long she was exposed. In addition to generating the RPI, Alice’s phone uses the Temporary Exposure Key to generate the correct Associated Encrypted Metadata Key. It then uses this key and the RPI to decrypt the metadata that was received from Bob’s phone at the time of exposure. This metadata gives her additional information about how strong the Bluetooth signal was, giving her an approximation as to how close she was to the person who was diagnosed with COVID-19.
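One common way to turn signal strength into a proximity hint is to compare the transmit power carried in the metadata with the signal strength Alice’s phone measured when it received the broadcast. The sketch below assumes both values are available in dBm; the measured value (RSSI) and the function name are our own additions for illustration and are not described in this post.

```python
def attenuation_db(tx_power_dbm: int, rssi_dbm: int) -> int:
    """Signal loss between sender and receiver; larger values suggest more distance."""
    return tx_power_dbm - rssi_dbm
```

For example, a broadcast sent at -20 dBm and received at -70 dBm has lost 50 dB along the way. Walls, pockets, and bodies all affect this number, so it is only ever an approximation of distance.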

All of this information gives Alice context as to whether she should get tested.

05. Security Considerations and Concerns

There are two areas of concern when it comes to GAENS and other proximity tracing solutions. The first is impersonation and replay attacks. In an impersonation attack, one user in the system holds the same Temporary Exposure Key as another user, either through malicious means or because the keys are not sufficiently random, and can therefore create the same RPIs and be recognized by other devices as that user.

In a replay scenario, an attacker could capture and repeat an RPI in an attempt to trick nearby user devices into believing they’ve been in contact with the user whose identifier was being falsely broadcasted. The other concern is third parties correlating RPIs of a given user with their actual identity, often called a “correlation attack.” Google and Apple have gone to great lengths to prevent people or organizations from doing this through the GAENS model.

Replay and Impersonation Attack Mitigation

In the GAENS model, the chance of impersonation by an attacker without the user releasing their Temporary Exposure Keys is “computationally infeasible,” as Google and Apple put it. It is so improbable that even if a potential attacker did try to guess a user’s Temporary Exposure Key in order to generate RPIs that impersonate that user, they would be unable to find it before the key was no longer valid. This is in part because the Temporary Exposure Key is long (16 bytes) and completely random. Replay attacks are incredibly hard to accomplish and extremely difficult to target at a certain individual, since the keys are generated randomly.
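A quick back-of-the-envelope calculation in Python shows why guessing is hopeless. The guessing rate below is a deliberately generous, hypothetical figure chosen for illustration, not a measurement of any real attacker.

```python
KEY_BITS = 16 * 8                # a 16-byte key has 128 bits
GUESSES_PER_SECOND = 10**12      # hypothetical attacker speed (assumption)
SECONDS_PER_DAY = 24 * 60 * 60   # a key is only valid for one day

keyspace = 2**KEY_BITS
guesses_in_one_day = GUESSES_PER_SECOND * SECONDS_PER_DAY
chance_within_validity = guesses_in_one_day / keyspace

print(f"possible keys: {keyspace:.3e}")
print(f"chance of a hit within 24 hours: {chance_within_validity:.3e}")
```

Even at a trillion guesses per second, the chance of hitting one specific key during the day it is valid is on the order of 1 in 10^21.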

If an attacker were to attempt a replay attack by re-broadcasting another user’s Rolling Proximity Identifier that they found in the wild, the identifier would only be valid for around 10 minutes, since each identifier is generated specifically for one of the day’s 144 intervals. A stale RPI is easy to dismiss, and it only needs to be handled at all if the replayed user ever tests positive for COVID-19.
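Dismissing a stale identifier can be as simple as comparing the interval an identifier was generated for with the interval in which it was actually observed. In the sketch below, the function name and the tolerance of 12 intervals (two hours, to allow for clock drift) are assumptions made for illustration.

```python
def is_fresh(derived_interval: int, observed_interval: int,
             tolerance_intervals: int = 12) -> bool:
    """True if the identifier was observed close to the interval it was made for.

    tolerance_intervals is a hypothetical allowance for clock drift.
    """
    return abs(observed_interval - derived_interval) <= tolerance_intervals
```

An identifier captured in the morning and replayed in the evening would fail this check, so the receiving phone would not count it as an exposure.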

The sheer improbability of guessing a Temporary Exposure Key and the ineffectiveness of replaying a user’s RPI mean that replay and impersonation attacks are not likely threats when it comes to GAENS.

Correlation Attack Mitigation

It is becoming increasingly common for retail shops and other public venues to track foot traffic and customer location with beacons, and many phone applications already track user location as well, albeit anonymized to a certain degree. This information, scrubbed of directly identifying information such as your name, the app you were using, or your phone’s Media Access Control (MAC) address, is then made available for purchase via a location data broker. While this data is anonymized, it could be used in conjunction with other private and public data to correlate the RPI to a specific user, although Google and Apple are making correlating this data extremely difficult. The RPIs, being completely random, are virtually impossible to correlate on their own, and even with corroborating data from elsewhere, such as that from an outside broker, could never be tied to a specific device.

06. Other Solutions and Centralization vs. Decentralization

Generally, the three names we see used for frameworks in this space are Proximity Tracing, Contact Tracing, and Exposure Notification. Originally, Google and Apple launched their framework as a “contact tracing” platform, although they were quick to redefine it as an “Exposure Notification” system, a more apt term for what it accomplishes. But GAENS isn’t the only system being developed. There are a couple of others going by other names. Academic groups tend to use the term “proximity tracing,” with Decentralized Privacy-Preserving Proximity Tracing (DP-3T) and Pan-European Privacy-Preserving Proximity Tracing (PEPP-PT) being the two leading solutions academia is developing. While these solutions have some similarities in architecture, one of the most important differences between the DP-3T and PEPP-PT systems is their support for centralized and decentralized data collection.

In a centralized model for a COVID-19 tracing application, all proximity data generated by users would be stored in a central storage location controlled by the application provider. All three frameworks will support decentralized storage, in which users’ proximity data is stored only on the users’ mobile devices rather than on a central server, although the DP-3T authors have also outlined a centralized solution and the PEPP-PT authors say they will support both. Supporting one format over the other comes with trade-offs, the primary one being a question of “with whom do you want to place trust?” In a centralized approach, where proximity data and exposure notification data are managed by a service provider, you have to trust that the service provider is responsibly protecting user exposure data, and also notifying potentially exposed users.

On the other hand, in a decentralized approach, users need to place trust in other users in the system, whose devices, rather than a central operator, are in charge of responsibly broadcasting potential exposure data to everyone else. In this system, you run the risk of people potentially “spoofing” other users, and a user could maliciously expand or contract their proximity to other users, but this type of replay attack is mitigated by GAENS. In both cases, data is assumed to be anonymized, since user-identifying tokens are randomly generated. In the centralized case, however, you would also have to trust that the central service provider is not “de-anonymizing” that data by associating it with a user or their device in other ways.

There are other security concerns associated with centralization, and the DP-3T document does a good job of addressing most of the security concerns and considerations between the different approaches. Google and Apple remain adamant that a decentralized system is the most effective, and we’ve outlined how this approach works.

07. Moving Forward

While it’s still early in the development process, it’s encouraging to see organizations like Apple and Google working together to transparently create a proposal that aims to realize the benefits of contact tracing in a privacy-preserving way. Google and Apple, especially within the last few years, have been striving to promote user privacy and security as core requirements for their products.

In addition to building their own proposal for contact tracing, both companies announced that contact tracing mobile apps distributed through their app stores cannot track the geolocation of users. In this case, it’s clear that both Google and Apple are focused on maintaining user privacy both through the implementation of their exposure notification framework as well as through sound policy.

Balancing the benefits of contact tracing with user privacy is a hard thing to get right, but we’re excited to see organizations both from the industry and academia working together to push things in the right direction.